I’ve started encountering a problem that I could use some assistance troubleshooting. I’ve got a Proxmox system that hosts, primarily, my OPNsense router. I’ve had this specific setup for about a year.

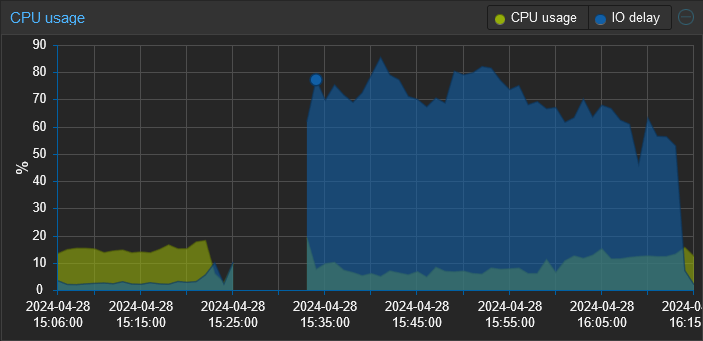

Recently, I’ve been experiencing sluggishness and noticed that the IO wait is through the roof. Rebooting the OPNsense VM, which normally only takes a few minutes, is now taking upwards of 15-20 minutes. The entire time, my IO wait sits between 50-80%.

The system has 1 disk in it, formatted with ZFS. I’ve checked dmesg and the syslog for indications of disk errors (this feels like a failing disk) and found none. I also checked the SMART statistics and they all “PASSED”.
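One thing that might be worth adding here: the overall SMART verdict generally only says that no attribute has crossed its failure threshold, so a disk can report PASSED while some raw error counters are already non-zero. Below is a minimal sketch of pulling those counters out. The function name `smart_red_flags` is made up for illustration, the three attribute names are common ATA ones that not every vendor reports, and `/dev/sda` is a placeholder.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `smartctl -A` output on stdin and prints any of
# three commonly watched ATA attributes whose raw value is non-zero.
# Attribute names vary by vendor, so treat this list as an assumption.
smart_red_flags() {
  awk '$2 ~ /^(Reallocated_Sector_Ct|Current_Pending_Sector|Offline_Uncorrectable)$/ && ($10 + 0) > 0 {
         print $2 "=" $10
       }'
}

# Example (device name is a placeholder):
#   sudo smartctl -A /dev/sda | smart_red_flags
#   sudo zpool status -v     # per-device read/write/checksum error counters
```

No output from the filter would be consistent with a healthy disk, which points the search away from hardware and toward whatever is generating the I/O.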

Any pointers would be appreciated.

Edit: I believe I’ve found the root cause of the change in performance, and it was a bit of shooting myself in the foot. I’ve been experimenting with different tools for log collection, and the most recent one is a SIEM tool called Wazuh. I didn’t realize that upon reboot it runs an integrity check that generates a ton of disk I/O. So when I rebooted this Proxmox server, that integrity check was running on Proxmox, my Pi-hole, and (I think) OPNsense concurrently, all against a single consumer-grade HDD.
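For anyone hitting the same thing, here is a rough sketch of checking whether a Wazuh agent is set to run its file integrity scan at startup. As far as I know the relevant syscheck option is `scan_on_start`, which defaults to `yes`. The function name is made up, and the default path is the usual Linux agent location, which may well differ on OPNsense.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reports whether a Wazuh agent config asks syscheck (FIM)
# to scan as soon as the agent starts. The option defaults to "yes", so a
# missing entry is treated as enabled.
syscheck_scans_on_start() {
  local conf="${1:-/var/ossec/etc/ossec.conf}"   # usual Linux agent path (assumed)
  if grep -Eq '<scan_on_start>[[:space:]]*no[[:space:]]*</scan_on_start>' "$conf"; then
    echo "no"
  else
    echo "yes"
  fi
}

# Example:
#   sudo bash -c "$(declare -f syscheck_scans_on_start); syscheck_scans_on_start"
```

If this prints `yes` on every guest, adding `<scan_on_start>no</scan_on_start>` inside the `<syscheck>` section and restarting the agent should, under that assumption, keep the scans from all firing together right after a host reboot.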

Thanks to everyone who responded. I really appreciate all the performance tuning guidance. I’ve also made the following changes:

- Added a 2nd drive (I have several of these lying around, don’t ask), converting the ZFS pool into a mirror. This gives me redundancy and should also improve read performance.

- In Proxmox, configured a 2nd storage target on the same zpool with compression enabled and a 64k block size. I then migrated the 2 VMs to that storage.

- Since I’m collecting logs in Wazuh, I set OPNsense to use RAM disks for /tmp and /var/log.

Rebooted OPNsense and it was back up in 1:42.
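In case it helps someone repeat the first two changes, this is roughly what they could look like from the command line. It is a dry-run sketch, not the exact commands I ran: the pool name, disk paths, dataset name, storage ID and the choice of `lz4` are all placeholders or assumptions, and the `pvesm` options are as I understand them.

```shell
#!/usr/bin/env bash
# Sketch only: every name below is a placeholder, not taken from the thread.
POOL="rpool"                          # assumed pool name
OLD_DISK="/dev/disk/by-id/OLD-DISK"   # hypothetical: disk already in the pool
NEW_DISK="/dev/disk/by-id/NEW-DISK"   # hypothetical: disk being added
DATASET="$POOL/vm64k"                 # hypothetical dataset for the new storage
STORAGE_ID="local-zfs-64k"            # hypothetical Proxmox storage ID

# Prints each command; executes it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; fi
}

convert_and_add_storage() {
  # Attach the new disk to the existing one, turning the single-disk vdev into a mirror.
  run zpool attach "$POOL" "$OLD_DISK" "$NEW_DISK"
  # Create a dataset with compression for the VM disks (lz4 is an assumption).
  run zfs create -o compression=lz4 "$DATASET"
  # Register it in Proxmox as a ZFS storage that creates zvols with a 64k block size.
  run pvesm add zfspool "$STORAGE_ID" --pool "$DATASET" --blocksize 64k --content images,rootdir
}

# Example: print the plan without touching anything
#   DRY_RUN=1 convert_and_add_storage
```

If the pool is also the boot pool, the new disk additionally needs a matching partition layout and bootloader setup, which this sketch leaves out.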

Upgrading a ZFS pool itself shouldn’t make a system unbootable even if an rpool (root pool) exists on it.

That could only happen if the upgrade died partway through, during a power outage or something like that. The upgrade itself usually only takes a few seconds from the command line.

If it makes you feel better, I upgraded mine with an rpool on it and it was painless. I do have everything backed up though, so I rarely worry. However, I understand being hesitant.

I’m referring to this.

    $ sudo proxmox-boot-tool status
    Re-executing '/usr/sbin/proxmox-boot-tool' in new private mount namespace..
    System currently booted with legacy bios
    8357-FBD5 is configured with: grub (versions: 6.5.11-7-pve, 6.5.13-5-pve, 6.8.4-2-pve)

Unless I’m misunderstanding the guidance.
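To make that output easier to compare between machines, here is a small sketch that boils it down to the firmware mode and the bootloader configured on each ESP. It only relies on the two line shapes shown in the status output above; the function name `boot_summary` is made up.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarises `proxmox-boot-tool status` output read on stdin.
# It relies only on the two line shapes quoted in this thread.
boot_summary() {
  awk '
    /^System currently booted with / {
      sub(/^System currently booted with /, "")
      print "firmware: " $0
    }
    / is configured with: / {
      # $1 is the UUID of the ESP, the word after "with:" is the bootloader.
      print "esp " $1 " -> " $5
    }'
}

# Example:
#   sudo proxmox-boot-tool status | boot_summary
```

For the output above this should give `firmware: legacy bios` and `esp 8357-FBD5 -> grub`.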

It looks like you are using legacy BIOS. Mine is using UEFI with a ZFS rpool.

    proxmox-boot-tool status
    Re-executing '/usr/sbin/proxmox-boot-tool' in new private mount namespace..
    System currently booted with uefi
    31FA-87E2 is configured with: uefi (versions: 6.5.11-8-pve, 6.5.13-5-pve)

However, like with everything, a method always exists to get it done. Or not, if you are concerned.

If you are interested it would look like…

Pool Upgrade

Confirm Upgrade

    sudo zpool status

Refresh boot config

Confirm Boot configuration

    cat /boot/grub/grub.cfg

You are looking for directives like this to see if they are indeed pointing at your existing rpool:

    root=ZFS=rpool/ROOT/pve-1 boot=zfs quiet

Here is my file if it helps you compare…

    #
    # DO NOT EDIT THIS FILE
    #
    # It is automatically generated by grub-mkconfig using templates
    # from /etc/grub.d and settings from /etc/default/grub
    #

    ### BEGIN /etc/grub.d/000_proxmox_boot_header ###
    #
    # This system is booted via proxmox-boot-tool! The grub-config used when
    # booting from the disks configured with proxmox-boot-tool resides on the vfat
    # partitions with UUIDs listed in /etc/kernel/proxmox-boot-uuids.
    # /boot/grub/grub.cfg is NOT read when booting from those disk!
    ### END /etc/grub.d/000_proxmox_boot_header ###

    ### BEGIN /etc/grub.d/00_header ###
    if [ -s $prefix/grubenv ]; then
      set have_grubenv=true
      load_env
    fi
    if [ "${next_entry}" ] ; then
      set default="${next_entry}"
      set next_entry=
      save_env next_entry
      set boot_once=true
    else
      set default="0"
    fi
    if [ x"${feature_menuentry_id}" = xy ]; then
      menuentry_id_option="--id"
    else
      menuentry_id_option=""
    fi
    export menuentry_id_option
    if [ "${prev_saved_entry}" ]; then
      set saved_entry="${prev_saved_entry}"
      save_env saved_entry
      set prev_saved_entry=
      save_env prev_saved_entry
      set boot_once=true
    fi
    function savedefault {
      if [ -z "${boot_once}" ]; then
        saved_entry="${chosen}"
        save_env saved_entry
      fi
    }
    function load_video {
      if [ x$feature_all_video_module = xy ]; then
        insmod all_video
      else
        insmod efi_gop
        insmod efi_uga
        insmod ieee1275_fb
        insmod vbe
        insmod vga
        insmod video_bochs
        insmod video_cirrus
      fi
    }
    if loadfont unicode ; then
      set gfxmode=auto
      load_video
      insmod gfxterm
      set locale_dir=$prefix/locale
      set lang=en_US
      insmod gettext
    fi
    terminal_output gfxterm
    if [ "${recordfail}" = 1 ] ; then
      set timeout=30
    else
      if [ x$feature_timeout_style = xy ] ; then
        set timeout_style=menu
        set timeout=5
      # Fallback normal timeout code in case the timeout_style feature is
      # unavailable.
      else
        set timeout=5
      fi
    fi
    ### END /etc/grub.d/00_header ###

    ### BEGIN /etc/grub.d/05_debian_theme ###
    set menu_color_normal=cyan/blue
    set menu_color_highlight=white/blue
    ### END /etc/grub.d/05_debian_theme ###

    ### BEGIN /etc/grub.d/10_linux ###
    function gfxmode {
      set gfxpayload="${1}"
    }
    set linux_gfx_mode=
    export linux_gfx_mode
    menuentry 'Proxmox VE GNU/Linux' --class proxmox --class gnu-linux --class gnu --class os $menuentry_id_option 'gnulinux-simple-/dev/sdc3' {
      load_video
      insmod gzio
      if [ x$grub_platform = xxen ]; then insmod xzio; insmod lzopio; fi
      insmod part_gpt
      echo 'Loading Linux 6.5.13-5-pve ...'
      linux /ROOT/pve-1@/boot/vmlinuz-6.5.13-5-pve root=ZFS=/ROOT/pve-1 ro root=ZFS=rpool/ROOT/pve-1 boot=zfs quiet
      echo 'Loading initial ramdisk ...'
      initrd /ROOT/pve-1@/boot/initrd.img-6.5.13-5-pve
    }
    submenu 'Advanced options for Proxmox VE GNU/Linux' $menuentry_id_option 'gnulinux-advanced-/dev/sdc3' {
      menuentry 'Proxmox VE GNU/Linux, with Linux 6.5.13-5-pve' --class proxmox --class gnu-linux --class gnu --class os $menuentry_id_option 'gnulinux-6.5.13-5-pve-advanced-/dev/sdc3' {
        load_video
        insmod gzio
        if [ x$grub_platform = xxen ]; then insmod xzio; insmod lzopio; fi
        insmod part_gpt
        echo 'Loading Linux 6.5.13-5-pve ...'
        linux /ROOT/pve-1@/boot/vmlinuz-6.5.13-5-pve root=ZFS=/ROOT/pve-1 ro root=ZFS=rpool/ROOT/pve-1 boot=zfs quiet
        echo 'Loading initial ramdisk ...'
        initrd /ROOT/pve-1@/boot/initrd.img-6.5.13-5-pve
      }
      menuentry 'Proxmox VE GNU/Linux, with Linux 6.5.13-5-pve (recovery mode)' --class proxmox --class gnu-linux --class gnu --class os $menuentry_id_option 'gnulinux-6.5.13-5-pve-recovery-/dev/sdc3' {
        load_video
        insmod gzio
        if [ x$grub_platform = xxen ]; then insmod xzio; insmod lzopio; fi
        insmod part_gpt
        echo 'Loading Linux 6.5.13-5-pve ...'
        linux /ROOT/pve-1@/boot/vmlinuz-6.5.13-5-pve root=ZFS=/ROOT/pve-1 ro single root=ZFS=rpool/ROOT/pve-1 boot=zfs
        echo 'Loading initial ramdisk ...'
        initrd /ROOT/pve-1@/boot/initrd.img-6.5.13-5-pve
      }
      menuentry 'Proxmox VE GNU/Linux, with Linux 6.5.11-8-pve' --class proxmox --class gnu-linux --class gnu --class os $menuentry_id_option 'gnulinux-6.5.11-8-pve-advanced-/dev/sdc3' {
        load_video
        insmod gzio
        if [ x$grub_platform = xxen ]; then insmod xzio; insmod lzopio; fi
        insmod part_gpt
        echo 'Loading Linux 6.5.11-8-pve ...'
        linux /ROOT/pve-1@/boot/vmlinuz-6.5.11-8-pve root=ZFS=/ROOT/pve-1 ro root=ZFS=rpool/ROOT/pve-1 boot=zfs quiet
        echo 'Loading initial ramdisk ...'
        initrd /ROOT/pve-1@/boot/initrd.img-6.5.11-8-pve
      }
      menuentry 'Proxmox VE GNU/Linux, with Linux 6.5.11-8-pve (recovery mode)' --class proxmox --class gnu-linux --class gnu --class os $menuentry_id_option 'gnulinux-6.5.11-8-pve-recovery-/dev/sdc3' {
        load_video
        insmod gzio
        if [ x$grub_platform = xxen ]; then insmod xzio; insmod lzopio; fi
        insmod part_gpt
        echo 'Loading Linux 6.5.11-8-pve ...'
        linux /ROOT/pve-1@/boot/vmlinuz-6.5.11-8-pve root=ZFS=/ROOT/pve-1 ro single root=ZFS=rpool/ROOT/pve-1 boot=zfs
        echo 'Loading initial ramdisk ...'
        initrd /ROOT/pve-1@/boot/initrd.img-6.5.11-8-pve
      }
    }
    ### END /etc/grub.d/10_linux ###

    ### BEGIN /etc/grub.d/20_linux_xen ###
    ### END /etc/grub.d/20_linux_xen ###

    ### BEGIN /etc/grub.d/20_memtest86+ ###
    ### END /etc/grub.d/20_memtest86+ ###

    ### BEGIN /etc/grub.d/30_os-prober ###
    ### END /etc/grub.d/30_os-prober ###

    ### BEGIN /etc/grub.d/30_uefi-firmware ###
    menuentry 'UEFI Firmware Settings' $menuentry_id_option 'uefi-firmware' {
      fwsetup
    }
    ### END /etc/grub.d/30_uefi-firmware ###

    ### BEGIN /etc/grub.d/40_custom ###
    # This file provides an easy way to add custom menu entries. Simply type the
    # menu entries you want to add after this comment. Be careful not to change
    # the 'exec tail' line above.
    ### END /etc/grub.d/40_custom ###

    ### BEGIN /etc/grub.d/41_custom ###
    if [ -f ${config_directory}/custom.cfg ]; then
      source ${config_directory}/custom.cfg
    elif [ -z "${config_directory}" -a -f $prefix/custom.cfg ]; then
      source $prefix/custom.cfg
    fi
    ### END /etc/grub.d/41_custom ###

You can see the lines by the linux sections.
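Put together, the four steps above could be sketched as one script. The commands for the "Pool Upgrade" and "Refresh boot config" steps are not shown above, so `zpool upgrade` and `proxmox-boot-tool refresh` here are my assumptions about what belongs there. The function names and the dry-run wrapper are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch of the four steps above. `zpool upgrade` and `proxmox-boot-tool refresh`
# are assumptions for the two steps whose commands are not shown in the thread.
POOL="rpool"   # pool name as it appears in the root= directive above

# Prints each command; executes it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; fi
}

# Lists the distinct root=ZFS=<pool>/... directives found in a grub.cfg.
root_directives() {
  grep -Eo 'root=ZFS=[^/[:space:]]+/[^[:space:]]+' "${1:-/boot/grub/grub.cfg}" | sort -u
}

upgrade_pool() {
  run zpool upgrade "$POOL"        # assumed: enable new feature flags on the pool
  run zpool status "$POOL"         # confirm the pool state after the upgrade
  run proxmox-boot-tool refresh    # assumed: re-sync kernels and boot config to the ESP(s)
  root_directives "${1:-/boot/grub/grub.cfg}"   # confirm: expect root=ZFS=rpool/ROOT/pve-1
}

# Example: print the plan without changing anything
#   DRY_RUN=1 upgrade_pool
```

Note that the header of the file above says /boot/grub/grub.cfg is not read when booting from disks configured with proxmox-boot-tool, so on such a system the last check may be informational only.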

Thanks, I may give it a try if I’m feeling daring.